a paradox with data abundance

garbage in, garbage out

good data into an open system, still a lot of garbage out

why?

a message about an occurrence or phenomenon is constructed from data about that occurrence or phenomenon

the data about an occurrence or phenomenon are not the same as that occurrence or phenomenon

these data are a selection from aspects of this occurrence or phenomenon, with many other data selections possible

this selective process may be shaped by coincidence, opportunity, belief, interest, policy, expectation, judgement and other filtering mechanisms

so the data collected give a selective snapshot of the reality of an occurrence or phenomenon, with many other alternative snapshots possible
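
a minimal sketch of such selective snapshots, in python, under made-up assumptions: the "phenomenon" is a hypothetical list of 10,000 numbers, and the two filters (a threshold of 45, the first 50 items) are arbitrary stand-ins for expectation and opportunity

```python
import random
import statistics

rng = random.Random(1)

# hypothetical "phenomenon": 10,000 measurements (illustrative numbers only)
population = [rng.gauss(50.0, 10.0) for _ in range(10_000)]


def snapshot(data, keep):
    """Summarize only the items that pass the selection filter `keep`."""
    chosen = [x for x in data if keep(x)]
    return statistics.fmean(chosen)


# three snapshots of the same reality, each from a different selection
everything = snapshot(population, lambda x: True)
by_expectation = snapshot(population, lambda x: x > 45.0)  # only what the observer expects to matter
by_opportunity = statistics.fmean(population[:50])  # only what happened to be at hand

if __name__ == "__main__":
    print("all data:       ", round(everything, 2))
    print("by expectation: ", round(by_expectation, 2))
    print("by opportunity: ", round(by_opportunity, 2))
```

each summary is computed honestly from real data, yet the three need not agree, because each rests on a different selection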

a message needs interpretation, and data about an occurrence or phenomenon can be interpreted in many different ways

there are many, more or less correct ways to interpret and report an occurrence or phenomenon; you may consider them supplementary

and call this "supplementary pluriformity"

there are many, more or less incorrect / distorted / wrong ways to interpret and report an occurrence or phenomenon

you may call this "contradictory pluriformity"

there is no sharp boundary between supplementary pluriformity and contradictory pluriformity but an overlap, a gradual transition

it follows from observation, and is confirmed by analogy with the second law of thermodynamics, that there is way more contradictory than supplementary pluriformity around

because of this and because of the overlap, good and correct information is prone to contamination by incorrect information

because of this there is way more incorrect / distorted / wrong information around than correct information

as this is a natural and unavoidable course of events, most of this distorted and wrong information arises unintentionally

also, the further from the source (in space or time or scale) and the more transitions / connections / amplifications / translations / interpretations between an occurrence or phenomenon and the ultimate message, the more bias and distortion and noise are introduced into the message
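
a minimal sketch of this accumulation, in python, under a strong simplifying assumption: each retelling adds a small independent random error to a single number; the names `relay` and `rms_error`, the noise level and the trial count are all hypothetical, and real messages distort in far richer ways

```python
import random
import statistics


def relay(value, hops, noise_sd=1.0, rng=random):
    """Pass a number through `hops` retellings; each adds independent Gaussian noise."""
    for _ in range(hops):
        value += rng.gauss(0.0, noise_sd)
    return value


def rms_error(true_value, hops, trials=2000, noise_sd=1.0, seed=0):
    """Root-mean-square distance between the final message and the source, over many trials."""
    rng = random.Random(seed)
    squared = [
        (relay(true_value, hops, noise_sd, rng) - true_value) ** 2
        for _ in range(trials)
    ]
    return statistics.fmean(squared) ** 0.5


if __name__ == "__main__":
    # more steps between source and message: larger typical error
    for hops in (0, 1, 4, 16):
        print(hops, "hops:", round(rms_error(100.0, hops), 2))
```

with independent additive noise the typical error grows roughly with the square root of the number of steps; a systematic bias at each step would make it grow faster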

fake news (intentionally distorted and wrong information) is just a small part of all wrong information around

good correct information is only a small part of all available information

as good correct information has great value and is a rare commodity, it mostly commands a high price on the information marketplace and is not easily available - which adds to the rarity of good information

it follows from supplementary pluriformity that a good and correct form of information about an occurrence or phenomenon is just one of many possible good and correct forms of information

it follows also that in the "big data" approach the above complications are amplified and most information produced this way is distorted and incomplete - maybe some good data goes in, but still a lot of garbage comes out - because "big data" itself is an open system

so in spite of the tremendous power of computers and data processing, and the abundant observation, registration and statistical manipulation of all kinds of data about occurrences and phenomena, it follows from the above reasoning that good and correct information is still a rare commodity

this is a paradox of data abundance - data may be instrumental, but only up to a point, and they cannot replace real phenomena

witness the contortions of the scientific enterprise

a sobering realization, but a liberation as well

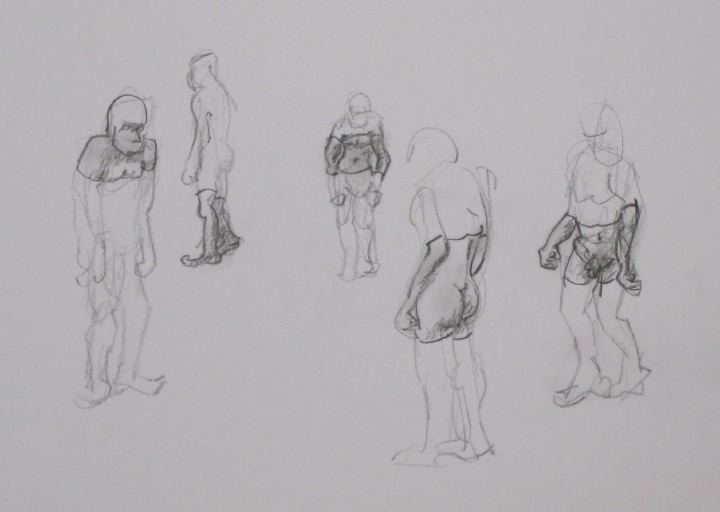

as nothing beats reality - the direct experience of physical existence and nature